Although the Pentagon has blocked the use of DeepSeek and identified it as a threat to national security, Big Tech seems to think otherwise. Before any legal action has been taken over OpenAI’s allegation that DeepSeek used its data to pre-train its models, Microsoft and Nvidia have announced their support for consumers' access to DeepSeek’s R1 model.

Microsoft announced on Jan. 29 that R1 is available on its Azure AI Foundry and GitHub, and that customers can run “distilled flavors” of the DeepSeek R1 model locally on their Copilot+ PCs. One day later, Nvidia made the model accessible to customers on its NIM platform.

This may have left some people scratching their heads. DeepSeek’s emergence sent the share prices of those tech companies plunging, didn’t it? Why are they helping DeepSeek now?

Several reasons might explain why Microsoft and Nvidia moved so quickly to make DeepSeek available on their platforms.

Their Business Model as Platforms

DeepSeek’s R1 uses computing resources more efficiently. Nvidia’s press release stated, “DeepSeek-R1 is a perfect example of this scaling law, demonstrating why accelerated computing is critical for the demands of agentic AI inference.”

As platforms for AI agents, Microsoft and Nvidia charge fees for the apps that run on them. Although Microsoft is a major investor in OpenAI, the two companies do not share the same interests.

“The ones to take the blows from DeepSeek should be closed-source AI firms such as OpenAI and Anthropic,” Taiwan-based AI company iKala CEO Sega Cheng wrote. “Since DeepSeek is open source and able to be deployed at low cost, everyone can tweak it and use it, including the US vendors, who are catching up.”

What about Nvidia? Is it not worried that DeepSeek’s emergence will decrease demand for its AI GPUs?

The Jevons paradox, the economic principle that improving efficiency often increases demand, is believed to be the reason for optimism. Nvidia’s launch of Project Digits at CES 2025 in January also shows that it anticipated the trend toward smaller models running at the edge.
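The Jevons argument can be made concrete with a small back-of-the-envelope sketch. It assumes a constant-elasticity demand curve, which is a textbook simplification and not something Nvidia or DeepSeek has published; the function name and the elasticity values are hypothetical and chosen only for illustration. If efficiency improves by some factor, the cost per token falls by that factor, the number of tokens demanded rises, and total GPU-hours go up only when demand is elastic enough.

```python
def gpu_demand_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Relative change in total GPU-hours after an efficiency gain.

    Illustrative assumption: tokens demanded scale as
    cost_per_token ** (-elasticity), and cost per token falls in
    proportion to the efficiency gain.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    # Cheaper tokens -> more tokens demanded.
    tokens_multiplier = efficiency_gain ** elasticity
    # Each token now needs fewer GPU-hours.
    gpu_hours_per_token_multiplier = 1.0 / efficiency_gain
    return tokens_multiplier * gpu_hours_per_token_multiplier


if __name__ == "__main__":
    # Hypothetical elasticities: inelastic, unit-elastic, elastic demand.
    for elasticity in (0.5, 1.0, 1.5):
        change = gpu_demand_multiplier(10.0, elasticity)
        print(f"elasticity={elasticity}: GPU-hours x{change:.2f}")
```

Under these assumptions, GPU demand grows after an efficiency gain only when the elasticity is above 1. Whether real-world demand for AI compute is that elastic is exactly what the optimists are betting on.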

Data Security Issue Resolved?

Since R1 is publicly available on open-source platforms, anyone can use it, examine it, and apply it according to the licensing conditions set by DeepSeek. However, shouldn’t we be cautious about the “national security risks” the U.S. government has warned about?

It is indeed a risk if sensitive data is stored on DeepSeek’s servers and the Chinese government gets access to it, and questions have been raised about the company’s data security. However, AI expert Ambuj Tewari, a professor of statistics at the University of Michigan, said that if the model weights are open and the model can be run on servers owned by U.S. companies, there is no such concern.

True Contribution

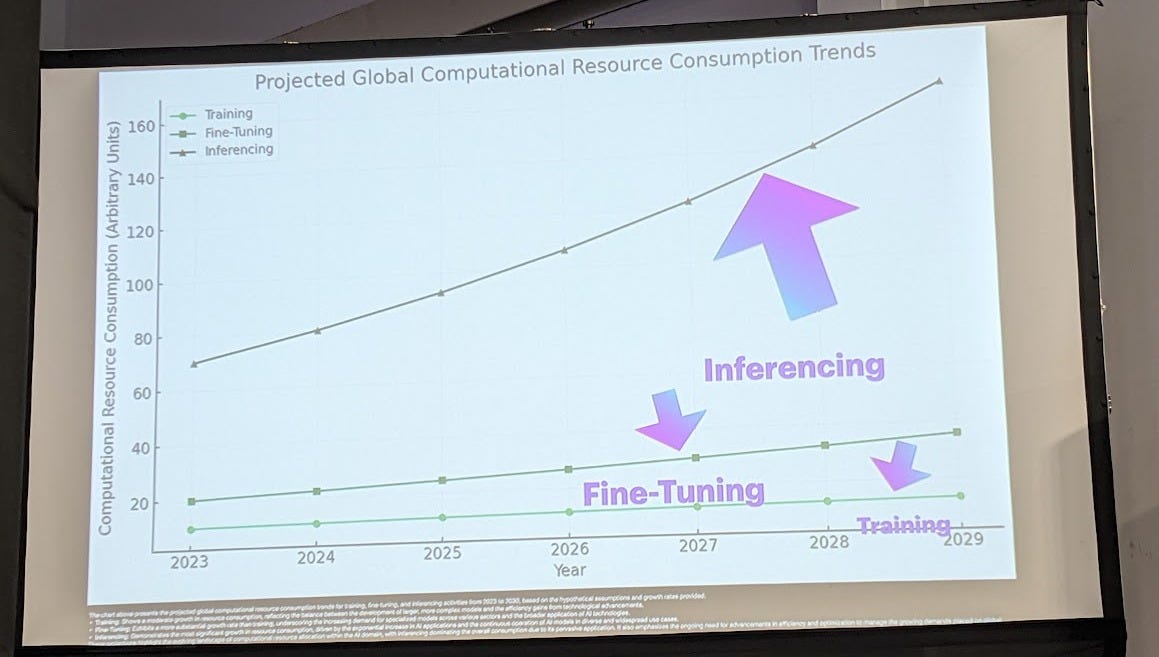

The most important reason perhaps lies in the fact that DeepSeek has indeed achieved breakthroughs that will benefit the development of inference computing. Experts have pointed out that inference consumes far more resources than training and fine-tuning (see the chart in the photo, which was taken in April 2024 at an AI forum in Taipei). DeepSeek’s breakthrough in distilling R1 into Llama 3 and Qwen helps reduce energy consumption and makes AI more accessible. SemiVision explains that the training process uses FP8, a low-precision data format that significantly reduces memory demands while improving efficiency. “Instead of needing a supercomputer, you can run a powerful model on a single GPU,” according to Tahir.

However, J. C. Shen, a professor at York University in Ontario, Canada, pointed out that all companies are working to improve the efficiency of their algorithms, and DeepSeek is not the only one making progress in optimizing inference computing. Other LLM players are making the same efforts. “When investigating the cost of GPT-4 in a large model, for example, SemiAnalysis found that improvements in the algorithm reduced the cost by a factor of 10 and increased the capacity of the model.”
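To give a rough sense of why a low-precision format such as FP8 reduces memory demands, here is a minimal sketch that estimates the memory needed just to hold a model's weights at different precisions. The byte sizes per format are standard (4 bytes for FP32, 2 for FP16/BF16, 1 for FP8). The 70-billion-parameter figure and the helper name `weight_memory_gb` are hypothetical, and the sketch ignores activations, optimizer state, and caches, so it does not describe DeepSeek's actual training setup.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {
    "fp32": 4,
    "bf16": 2,
    "fp16": 2,
    "fp8": 1,
}


def weight_memory_gb(num_params: int, fmt: str) -> float:
    """Memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    if fmt not in BYTES_PER_PARAM:
        raise ValueError(f"unknown format: {fmt}")
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    # Hypothetical model size, chosen only for illustration.
    num_params = 70_000_000_000
    for fmt in ("fp32", "bf16", "fp8"):
        print(f"{fmt}: {weight_memory_gb(num_params, fmt):.0f} GB")
```

Halving the bytes per parameter halves the weight footprint, which is one reason lower precision and smaller distilled models make it more plausible to run a capable model on modest hardware.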

Can DeepSeek be Trusted?

Nevertheless, there are still matters that make people uneasy about using DeepSeek. What about the origin of its data? Although DeepSeek states in its R1 paper that knowledge was distilled from R1 into Llama and Qwen to enhance the reasoning capabilities of those models, rather than the other way around, it did not reveal the pre-training data of V1, V2, and V3, the foundations of R1.

Some have asked why DeepSeek’s data seems to have stopped updating in July 2024. It might be a coincidence, but OpenAI also cut China’s access to its API service in July 2024, according to Global Times, a Chinese Communist Party media outlet.

To make its case, OpenAI must provide strong evidence for its accusations, given the presumption of innocence. Experts said that would be difficult, since only the final model developed by DeepSeek is public and not its training data. Meanwhile, people don’t seem to mind where DeepSeek got the data it used for pre-training and distillation. So many download requests came in that DeepSeek’s servers crashed for some time.

DeepSeek has not revealed all the information about V3 and R1 to the world. SemiAnalysis reported that High-Flyer, the parent company of DeepSeek, purchased 10,000 A100 GPUs in 2021, before any export restrictions. While Scale AI CEO Alexandr Wang’s claim that DeepSeek has access to around 50,000 H100 chips is not confirmed, SemiAnalysis said it is confident that the Chinese AI firm has 50,000 Hopper GPUs. These include H800s, which have the same computational power as H100s but lower network bandwidth.

DeepSeek’s reported $5.6 million cost covers only the GPU cost of the pre-training run, which is a small fraction of the total cost of the model. It does not include significant expenses such as R&D or the total cost of ownership (TCO) of the hardware itself. It is estimated that well over $500 million has been spent on hardware alone, much of it in the model development process to develop new architectural innovations.

While OpenAI faces challenges in proving its allegations, the overwhelming demand for DeepSeek’s models suggests that many users prioritize access and performance over transparency. The U.S. and its allies may only hold a narrow lead of 3-6 months. There is little room for complacency. With major players like Microsoft and Nvidia backing DeepSeek, its role in the AI landscape is undeniable—whether as a catalyst for innovation or a point of geopolitical contention.
