The Super Bowl of AI: Decoding Nvidia's GTC 2025 Keynote

Accelerated Computing and Key Concepts Explained

Thanks to the lightning speed of AI development, Nvidia’s GTC gets bigger every year. This year it was described as the “Super Bowl of AI”, outdoing last year’s “Woodstock of AI”. Having sifted through a mass of information, TechSoda presents the key insights from Nvidia CEO Jensen Huang’s keynote speech.

If we had to sum up GTC 2025 in one sentence, here’s what caught our eye: “As accelerated computing will soon reach critical mass and AI undergoes radical change, Nvidia is driving the future forward.”

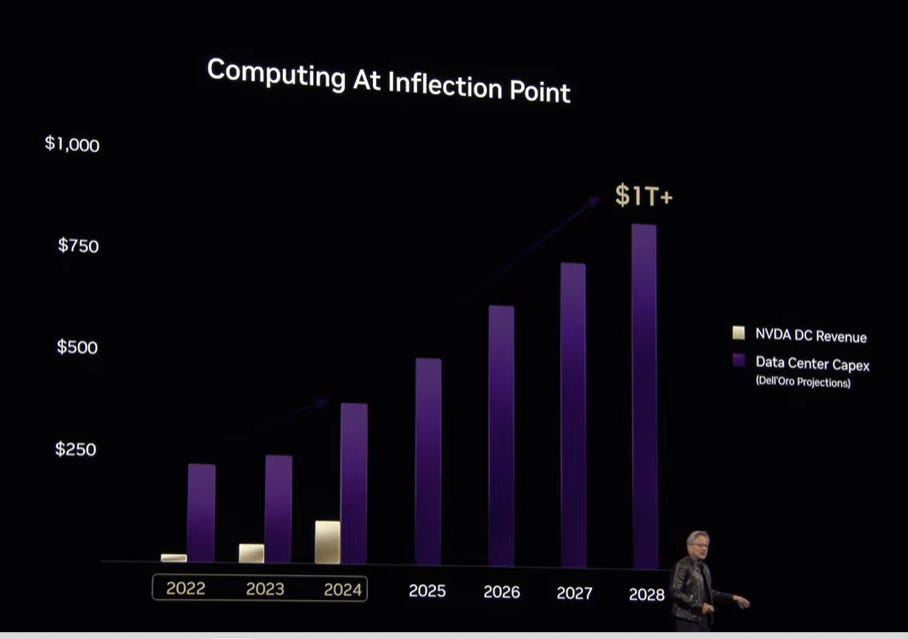

“Trillion” was the keyword of this keynote. Jensen said it at least 16 times: trillion tokens, trillion parameters, trillion transistors, robotics as the next trillion-dollar business, and trillion floating point operations per second. He had previously said that he expects data center build-out to reach a trillion dollars by 2030, and this year he said, “I am fairly certain we're going to reach that very soon.”

Accelerated computing is a holistic approach: Nvidia combines hardware, software, and domain-specific optimizations to unlock AI’s potential across industries, from data centers to edge networks. At GTC 2025, it was announced that Cisco, Nvidia, T-Mobile, and Cerberus ODC will build a full stack for radio networks in the United States to put AI at the edge. The significance? Autonomous cars will be big business for Nvidia.

According to Jensen, Nvidia powers autonomous driving through three core pillars:

Data Center GPUs: Used by Tesla, Waymo, and Wayve to train AI models for self-driving systems.

In-Vehicle Compute: Nvidia’s DRIVE AGX platform (e.g., Orin chips) runs in cars from Toyota, Mercedes, and GM, handling real-time decision-making.

Full-Stack Solutions: Includes AI training (DGX), simulation (Omniverse), and safety-certified software (DriveOS).

Jensen also announced that GM has selected Nvidia as a partner to build its future self-driving car fleet.

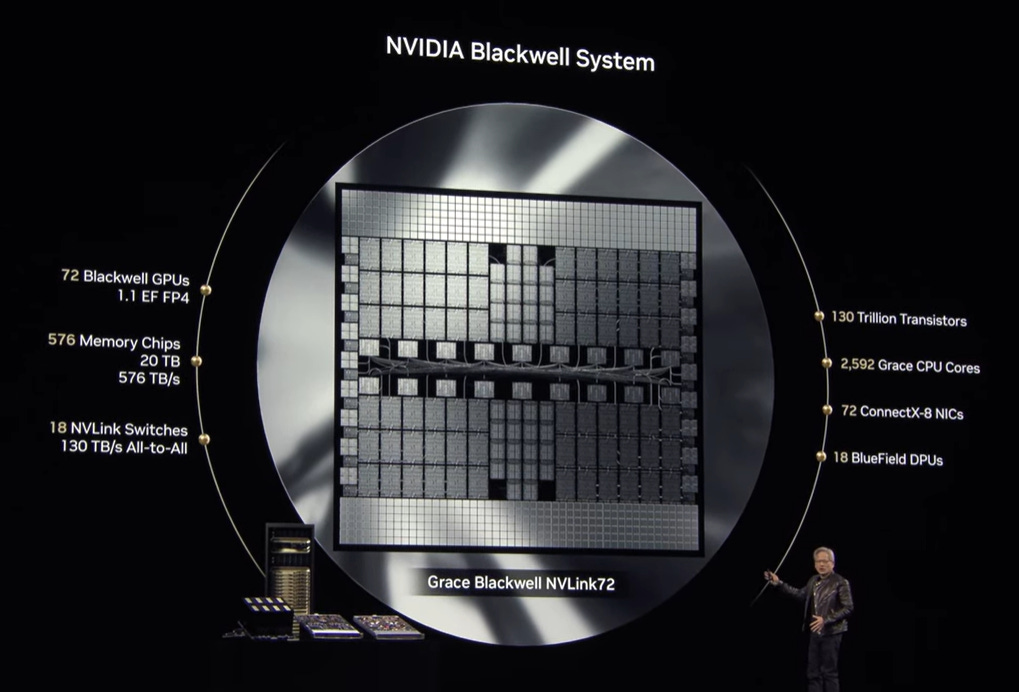

GTC 2025 was no ordinary product launch of the kind Nvidia and other technology companies hold each year, even though the next-generation GPU architecture, Feynman, was announced. People may have picked up headlines such as “Blackwell is shipping in volume”, “NVIDIA Dynamo is announced”, or “Everything is liquid-cooled”. More importantly, Jensen’s keynote went to great lengths to explain the concepts behind accelerated computing, which requires massive scale-up.

Dispelling doubts about R1 decreasing computing demand

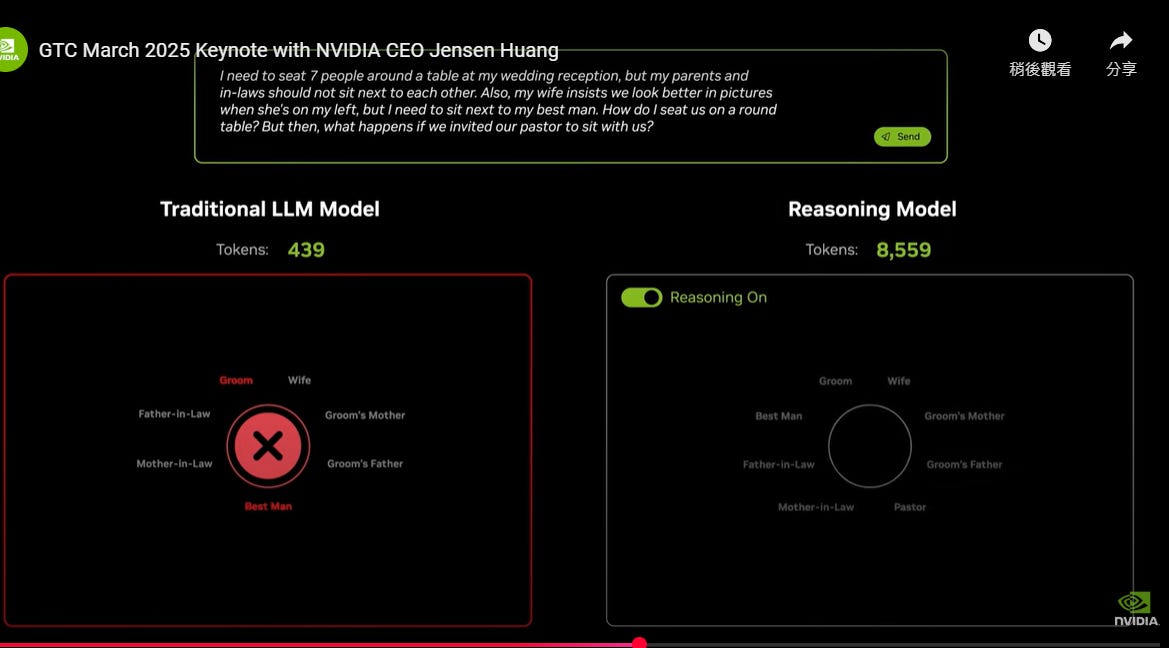

Not surprisingly, Jensen took time to explain why DeepSeek’s R1 reasoning model will boost the demand for computing power instead of decreasing it. He compared how a traditional LLM and a reasoning model each solve a wedding seating problem.

A traditional LLM attempted to seat 300 wedding guests with constraints (traditions, feuds, photogenic layouts) in a single pass, producing a flawed answer in under 500 tokens.

In contrast, the reasoning model R1 broke down the problem, tested multiple scenarios, and self-verified its solution through iterative reasoning, consuming ~8,000 tokens. While the traditional LLM’s answer was fast but error-prone, R1’s thorough analysis ensured accuracy, though at a higher computational cost. This highlights reasoning models' ability to handle complex, multi-variable tasks through step-by-step logic and self-correction, even if slower and more resource-intensive.
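To make the gap concrete, here is a rough back-of-the-envelope sketch in Python using the two token counts quoted above. Both figures are approximate, the single-pass answer is treated as a full 500 tokens (an upper bound), and the function name is ours, chosen only for illustration.

```python
def token_multiplier(reasoning_tokens: int, single_pass_tokens: int) -> float:
    """Return how many times more tokens the reasoning run generated."""
    if single_pass_tokens <= 0:
        raise ValueError("single_pass_tokens must be positive")
    return reasoning_tokens / single_pass_tokens


# Approximate figures from the keynote example; 500 is an upper bound.
SINGLE_PASS_TOKENS = 500
REASONING_TOKENS = 8_000

multiplier = token_multiplier(REASONING_TOKENS, SINGLE_PASS_TOKENS)
print(f"The reasoning run used at least {multiplier:.0f}x the tokens")
```

Because the single-pass answer used fewer than 500 tokens, the real multiplier is somewhat higher than this estimate. Every extra token has to be generated at inference time, which is the core of Jensen's argument that reasoning models raise compute demand.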

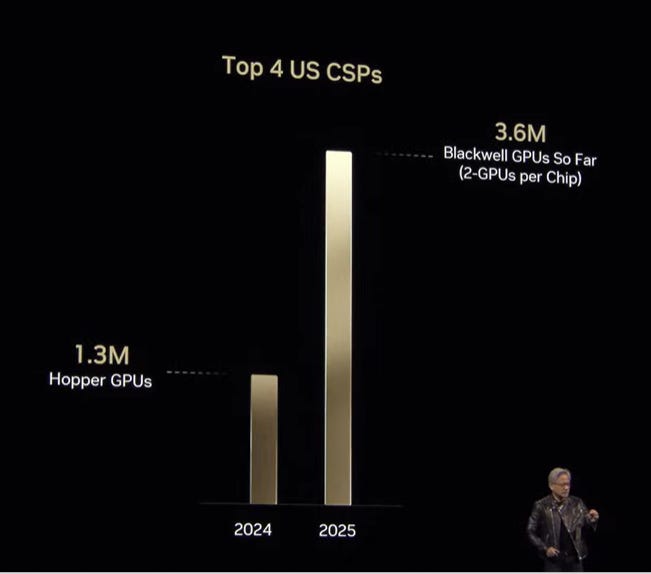

Jensen wasted no time in addressing rumors that customers were delaying or cutting orders for Blackwell chips. He showed a bar chart indicating that the top four cloud service providers (CSPs) have ordered 3.6 million Blackwell GPUs so far this year, up from 1.3 million Hopper GPUs purchased in 2024. However, Jensen noted in parentheses that each Blackwell chip contains two GPUs. Does that mean we should multiply the 3.6 million by two, or divide it by two? Even if it is the latter, we are still in March and Blackwell orders already exceed the Hopper total for all of 2024, so investors may not need to worry too much.
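The three possible readings of the chart can be checked with a few lines of Python. This is only a sketch of the arithmetic: the two headline figures come from the chart as reported above, while the labels and the helper function are our own.

```python
HOPPER_2024 = 1_300_000        # Hopper GPUs purchased in 2024 (from the chart)
BLACKWELL_SO_FAR = 3_600_000   # Blackwell GPUs ordered so far (from the chart)


def ratio_to_hopper(blackwell_units: float) -> float:
    """Compare a Blackwell unit count against the 2024 Hopper total."""
    return blackwell_units / HOPPER_2024


# Three ways to read the "two GPUs per Blackwell chip" note.
readings = {
    "as stated": BLACKWELL_SO_FAR,
    "halved (3.6M counts GPUs, so 1.8M chips)": BLACKWELL_SO_FAR / 2,
    "doubled (3.6M counts chips, so 7.2M GPUs)": BLACKWELL_SO_FAR * 2,
}

for label, units in readings.items():
    print(f"{label}: {units:,.0f} units, {ratio_to_hopper(units):.2f}x Hopper 2024")
```

Even under the most conservative reading, 1.8 million units is still above the 1.3 million Hopper total for the whole of 2024.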

The Future of Computing: A Shift to AI-Driven Infrastructure

Computing Transition: The world is moving from traditional general-purpose computing to machine learning-based systems using accelerators and GPUs. This shift has passed its tipping point, with significant changes in data center infrastructure.

Generative Computing: Software development is evolving from manually written code to computer-generated content. This means computers will create data (tokens) that can be transformed into various forms like music, videos, or research.

AI Factories: The new data centers, or "AI factories," are designed solely to generate these tokens, marking a fundamental change in how we build and use computing infrastructure. Everything in these data centers will be accelerated to support this new approach.

Efficient data processing will be key to balancing the cost of scaling up infrastructure against revenue generation for AI companies. If inference is to spark a paradigm shift in accelerated computing, factors such as the limits of Moore’s Law and energy constraints have to be taken into consideration.

Jensen therefore stressed the need for software optimization to work within these limits.

To cope with the massive future demand for inference computing, Nvidia announced NVIDIA Dynamo, the operating system of an AI factory, and made it open source. “In the future, the application is not enterprise IT. It's agents, and the operating system is not something like VMware. It's something like Dynamo, and this operating system is running on top of not a data center, but on top of an AI factory,” said Jensen.

Moore's Law Limitations: Historically, Moore's Law predicted that transistor density doubles every two years, driving computing advancements. However, this law is now facing physical and energy limitations, particularly in terms of power consumption and transistor size.

New Computing Paradigms: The focus is shifting towards more efficient architectures, such as programmable systems that can adapt to diverse workloads. This includes optimizing configurations for different tasks, and leveraging concepts like the Pareto frontier to find the most efficient setups.

Performance Gains: New technologies, such as Blackwell, are achieving significant performance boosts—up to 25 times or even 40 times that of previous systems like Hopper. This is crucial for handling complex tasks and next-generation workloads, especially in AI and reasoning models.

Energy Constraints: Despite these advancements, energy consumption remains a major constraint. Data centers can only handle so much power, making efficient energy use a critical factor in future computing developments.
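The Pareto frontier idea mentioned above can be sketched in a few lines of Python. This is a minimal illustration, not Nvidia's method: the two axes (responsiveness per user and throughput per megawatt), the configuration names, and all the numbers are assumptions invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    name: str
    tokens_per_sec_per_user: float  # responsiveness seen by one user
    tokens_per_sec_per_mw: float    # factory throughput per megawatt


def dominates(a: Config, b: Config) -> bool:
    """True if a is at least as good as b on both axes and better on one."""
    at_least_as_good = (
        a.tokens_per_sec_per_user >= b.tokens_per_sec_per_user
        and a.tokens_per_sec_per_mw >= b.tokens_per_sec_per_mw
    )
    strictly_better = (
        a.tokens_per_sec_per_user > b.tokens_per_sec_per_user
        or a.tokens_per_sec_per_mw > b.tokens_per_sec_per_mw
    )
    return at_least_as_good and strictly_better


def pareto_frontier(configs: list[Config]) -> list[Config]:
    """Keep only configurations that no other configuration dominates."""
    return [c for c in configs if not any(dominates(o, c) for o in configs)]


# Hypothetical numbers, invented purely for illustration.
candidates = [
    Config("large-batch", 20, 900),
    Config("balanced", 60, 600),
    Config("low-latency", 150, 200),
    Config("misconfigured", 50, 400),  # worse than "balanced" on both axes
]

for c in pareto_frontier(candidates):
    print(c.name)
```

With a fixed power budget, only the configurations on the frontier are worth running. Which one to choose depends on whether the operator values fast responses for each user or total token output for the whole facility.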

Jensen argues that traditional approaches like Hadoop, which rely heavily on scaling out, are insufficient for complex tasks like deep learning because of their energy consumption and cost. Scaling up is therefore a necessary step before scaling out to achieve efficient and cost-effective solutions.

Visions for Applications:

Nvidia's cuLitho, a computational lithography platform, is gaining momentum with significant support from industry leaders like TSMC, ASML, Synopsys, and others. This technology is poised to revolutionize semiconductor manufacturing by speeding up photomask development and enhancing chip design efficiency. In the next five years, it's expected that nearly all lithography processes will be optimized using Nvidia's solutions.

Jensen also mentioned applications such as Parabricks for gene sequencing and gene analysis, MONAI, the world's leading medical imaging library, Earth-2 multi-physics for predicting very high-resolution local weather, and CUDA-Q for quantum computing.

In the accelerated computing era, liquid cooling will replace air cooling, and optical interconnects will replace copper wiring because of heat dissipation challenges.

Key takeaway: The future of computing is not just about faster chips or more powerful GPUs; it's about creating an ecosystem that supports the exponential growth of AI.

Nvidia's vision for AI factories, where data centers are transformed into ultra-high-performance computing environments, marks a significant shift in how we approach computing infrastructure. The integration of technologies like Blackwell and Dynamo, coupled with strategic partnerships in industries such as automotive and telecommunications, positions Nvidia at the forefront of this revolution.

The journey to this new era of computing is not without its challenges. Energy efficiency, networking bottlenecks, and the need for scalable software solutions are just some hurdles to overcome. However, with innovations like liquid cooling and silicon photonics, Nvidia is tackling these challenges head-on.

AI-driven technologies will transform everything from autonomous vehicles to edge computing networks in the coming years. Nvidia's pivotal role in this transformation is clear, and its GTC 2025 keynote has set the stage for what promises to be an exciting and transformative decade in the world of AI and computing.
