Supply chain sources told TechSoda that NVIDIA has granted its original design manufacturers (ODMs) new authority to choose components and parts for data center servers featuring NVIDIA chips.

This expanded procurement flexibility applies across all server models, including the GB200 NVL72, the H100 series, and the upcoming GB300 series, which, according to a source familiar with the matter, is expected to be taped out by year-end and launched in June 2025.

“NVIDIA previously controlled more than 80% of AI chip modules, leaving only 10-15% managed by ODMs,” a supply chain expert explained. “Now, ODMs can freely source materials, components, and parts from suppliers they trust, optimizing performance and cost-effectiveness.”

Major ODM partners of NVIDIA include Supermicro, Gigabyte, Quanta, Foxconn, Wistron, Wiwynn, and Inventec, among others. According to the latest report from Morgan Stanley, these ODMs still have room to raise their gross profit margins if they accelerate adoption of the components they select themselves.

However, another supply chain source noted that NVIDIA still has the final say, as customers must pass NVIDIA’s validation process to secure the necessary GPUs for their servers.

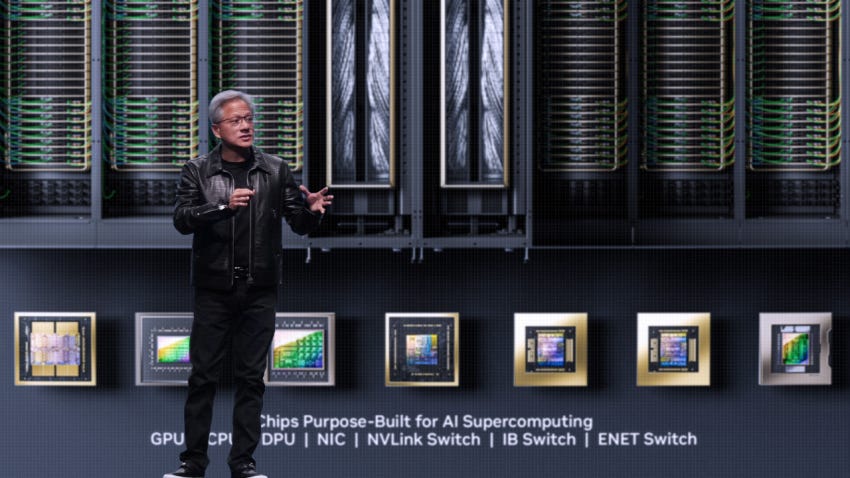

Experts suggest that NVIDIA’s tight control over supply chain procurement in the past was largely due to quality control concerns. “AI servers are costly, and a single error can compromise the entire product,” said one expert. “Additionally, servers generate significant heat during AI training, so NVIDIA prioritized the quality and safety of materials and components over cost.”

According to supply chain sources, an H100 server costs $400,000, while a GB200 NVL72 costs $4 million.

Having gained extensive experience working with NVIDIA, ODMs now understand the specifications needed to ensure product quality and security. "With each ODM offering unique server designs, it makes sense for them to select components tailored to achieve optimal heat dissipation and performance," an industry expert noted.

Because servers must run continuously, reliability and durability are critical. During deep learning workloads, each GPU's temperature can reach 90°C, and performance suffers if temperatures climb higher. Allowing ODMs to select cooling solutions that best fit their designs could therefore improve overall performance and reliability.

This shift may weigh on NVIDIA's gross profit margin, which stood at around 75% in the latest quarter, as the company relinquishes full control over supply chain procurement. "They've already made substantial profits on the hardware," a supply chain expert commented. "With software and applications now crucial for sustainable growth in the AI industry, NVIDIA may see this as a chance to focus more on those areas while leaving hardware decisions to ODMs."

Echoing NVIDIA's latest move, Mike Yang, Executive Vice President of Quanta Computer, spoke on a panel at the recent Taiwan Semiconductor Industry Association (TSIA) annual meeting, where he emphasized the critical need for closer collaboration between IC designers and system integrators to accelerate product development. He noted that effective integration demands a deep understanding of circuit board design, power management, heat dissipation, and semiconductors.

Yang pointed out that rack-scale systems can now support up to 172 GPUs, and future configurations may exceed 200. However, he warned that such high-density systems pose serious challenges. For instance, with networking speeds reaching 800G over Ultra Ethernet, power consumption per rack could approach one megawatt. With some racks reaching 600-700 kW, designing efficient power racks and effective cooling systems becomes essential to handle these substantial energy demands.

Yang stressed that each stage of this process is closely tied to IC design and to the advanced manufacturing processes at foundries. The entire supply chain should therefore work closely together, since a cohesive effort is essential to driving competitiveness in the industry.